¶ Description

Use the XGBoost Library.

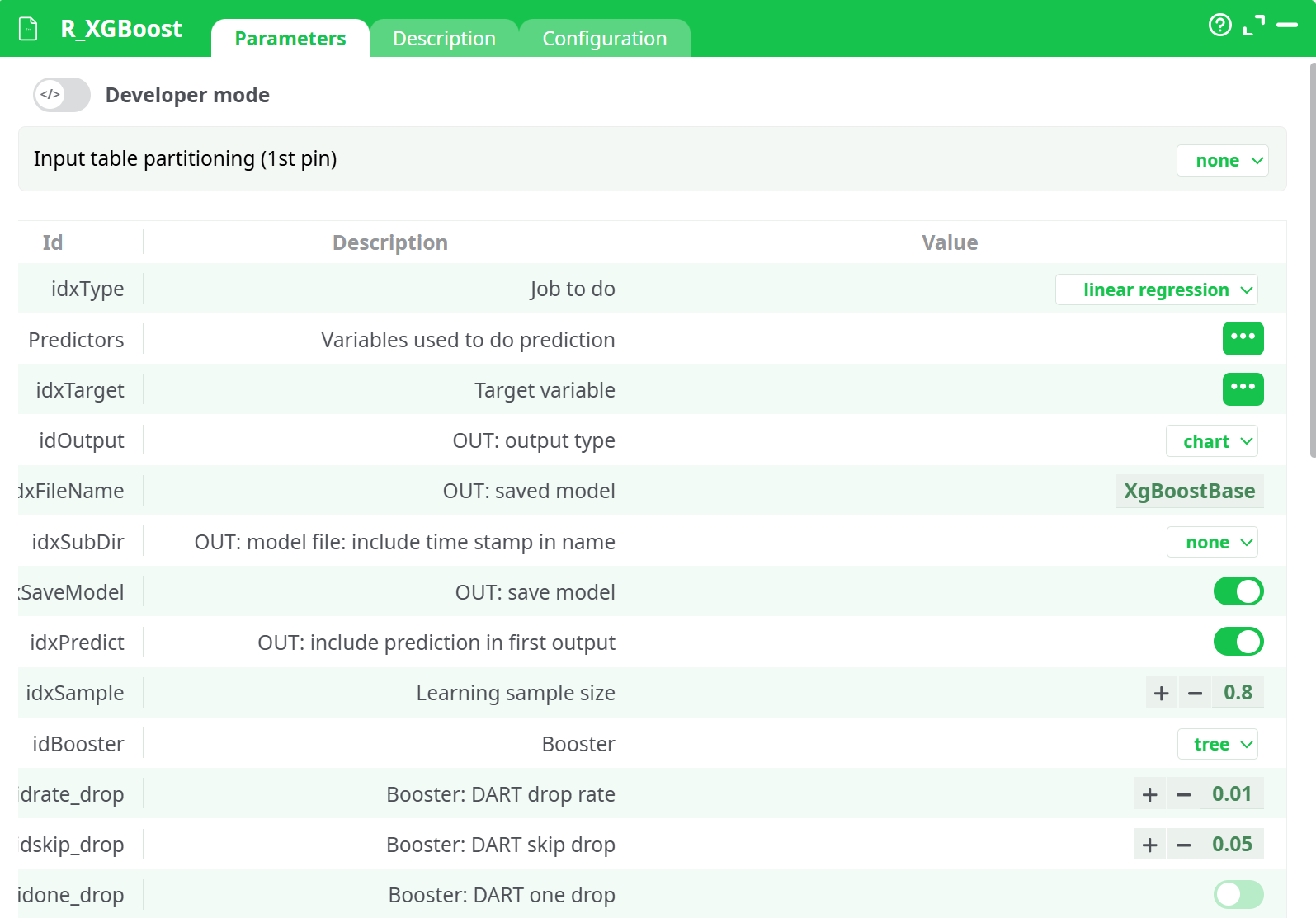

¶ Parameters

¶ Parameters tab

Parameters:

- Job to do

- Variables used to do prediction

- Target variable

- OUT: output type

- OUT: saved model

- OUT: model file: include time stamp in name

- OUT: save model

- OUT: include prediction in first output

- Learning sample size

- Booster

- Number of CPU to use (nthread)

- Verbosity (see training progress)

- Seed

- MOD: scale predictors (standardize)

- Cross validations (1=none)

- Number of passes on the data (nround)

- Hyperparameters tuning method

- Optimize penalization parameters

- HYP: alpha (L1)

- HYP: lambda (L2)

- Optimize other hyperparameters

- HYP: maximum depth of a tree (max.deph)

- HYP: min size to allow a split

- HYP: eta

- gamma

- SHAP: sample size

- SHAP: max number of features on plot

- PLOT: text color on ClassPlot

- PLOT: low color on ClassPlot

- PLOT: high color on ClassPlot

- PLOT: bar & area plot color

- PLOT: max columns for shap plots

- A short description

- A short description

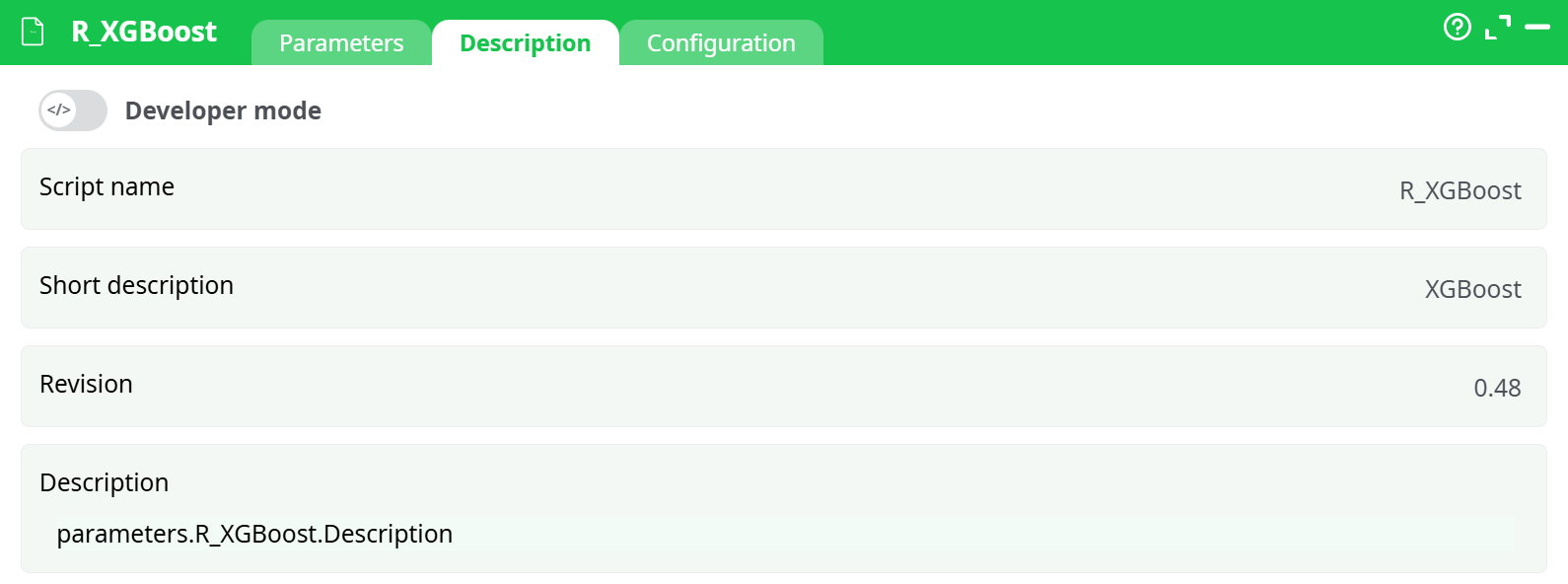

¶ Description tab

Parameters:

- Script name

- Short description

- Revision

- Decription

¶ Configuration tab

See dedicated page for more information.

¶ About

Gradiant Boosting is probably the most popular algorithm in this second decade of the 21st century. The main reason is that it performed extraordinarily well in most data mining competitions. It usually ensures one of the highest accuracy in situations where the learning and test datasets are from the same time frame.

In practice, we’ve seen those models degrade very quickly over time (in a banking setting, for example, the accuracy dropped 10 points below LASSO in just two months), so we tend not to use it.

The general idea of gradient boosting is to make ensemble modeling on steroid. By putting together hundreds or thousands of weak models we can obain a fairly good classifier. This is done at the cost of interpetability.

Fit a gradient Boosting model. The different operating modes are:

- linear regression

- logistic regression

- logistic regression for binary classification, output probability

- Multiple classification

- Multiple Probabilities

Note about ETA

XGBoost automatically does the hyperparameters optimization, but you are free to set the ETA to a lower value. ETA is the step size shrinkage (a bit similar to LASSO) used in update to prevents overfitting. After each boosting step, we can directly get the weights of new features, and it shrinks the feature weights to make the boosting process more conservative. The default value is 0.3. Lower values will take longer to compute and yield potentially overfitting models. A value of 1 will use a “naïve gradient boosting” algorithm.